A Comprehensive Guide to Xavier Initialization in Machine Learning Models

Introduction

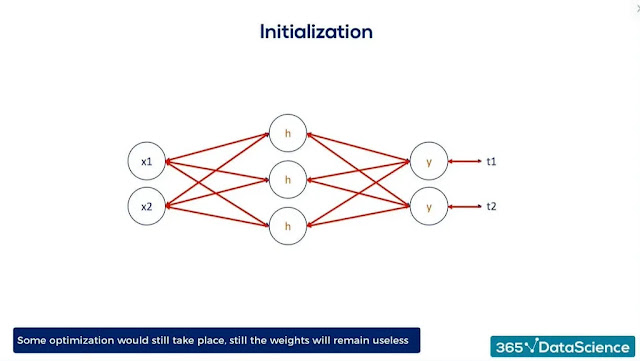

Xavier initialization is a weight initialization technique for neural networks that aims to make training more stable by keeping signals from vanishing or exploding as they pass through the layers. In this comprehensive guide, we will explore the basics of Xavier initialization, its benefits, and how to implement it in machine learning models.

What is Xavier Initialization?

Xavier initialization, also known as Glorot initialization, is named after Xavier Glorot, who introduced it together with Yoshua Bengio in a 2010 research paper. It gives the weights of a neural network a sensible starting scale, which can make the network easier and faster to train.

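The key idea behind Xavier initialization is to keep the variance of the signal roughly constant from layer to layer, both for the activations in the forward pass and for the gradients in the backward pass. For activations that behave roughly linearly around zero (as tanh does), keeping the forward variance constant requires a weight variance of 1 / n_in, while keeping the backward variance constant requires 1 / n_out. Xavier initialization compromises between the two by drawing each weight at random from a zero-mean distribution (typically normal or uniform) with a variance of:

variance = 2 / (n_in + n_out)

where n_in is the number of input units (the fan-in) and n_out is the number of output units (the fan-out) of the layer.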
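To see why the formula works, consider a square layer (n_in = n_out = n), where it reduces to 1 / n. Each output is a sum of n products of independent zero-mean terms, so its variance is n * Var(W) * Var(x) = Var(x): the layer passes unit-variance inputs on as roughly unit-variance outputs. The sketch below checks this numerically using only the Python standard library. The helper names are hypothetical, and biases and activation functions are deliberately left out.

```python
import math
import random
import statistics


def xavier_variance(n_in, n_out):
    """Xavier/Glorot target weight variance: 2 / (n_in + n_out)."""
    return 2.0 / (n_in + n_out)


def linear_layer(x, weights):
    """y_j = sum_i x_i * W[i][j]: a dense layer with no bias and no activation."""
    n_out = len(weights[0])
    return [sum(x[i] * weights[i][j] for i in range(len(x))) for j in range(n_out)]


def output_variance(n, trials=100, seed=0):
    """Estimate Var(y) for an n -> n layer with Xavier-normal weights and N(0, 1) inputs."""
    rng = random.Random(seed)
    std = math.sqrt(xavier_variance(n, n))  # equals sqrt(1 / n) when n_in == n_out
    outputs = []
    for _ in range(trials):
        weights = [[rng.gauss(0.0, std) for _ in range(n)] for _ in range(n)]
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        outputs.extend(linear_layer(x, weights))
    return statistics.pvariance(outputs)


if __name__ == "__main__":
    print(xavier_variance(784, 128))  # 2 / 912
    print(output_variance(64))        # expected to be close to 1.0
```

For a non-square layer the output variance is n_in * 2 / (n_in + n_out) rather than exactly 1, which is the price of the forward/backward compromise.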

Benefits of Xavier Initialization

Xavier initialization offers several benefits for machine learning models. These benefits include:

- Improved Training Stability: Weights that start too small make activations and gradients shrink toward zero as they pass through the layers, while weights that start too large make them grow or push saturating activations such as tanh and sigmoid into their flat regions. Scaling the weights to each layer's fan-in and fan-out helps avoid both vanishing and exploding gradients.

- Better Generalization: A well-scaled starting point can help the optimizer reach a better solution, which may translate into better performance on new, unseen data, although initialization alone does not guarantee it.

- Faster Training: Because every layer receives a usefully scaled signal from the first update, the network often converges in fewer iterations than it would with a poorly scaled initialization.
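The stability benefit can be seen in a quick forward-pass experiment. The sketch below, in plain Python with no framework, pushes random inputs through a deep stack of tanh layers (tanh is chosen because Xavier's analysis targets activations that are roughly linear around zero) and compares three weight scales. The width, depth, and the "too small" and "too large" standard deviations are arbitrary illustrative choices, and the helper names are hypothetical. It measures activations rather than gradients directly: activations that collapse toward zero indicate a vanishing signal, and a high fraction of saturated units means tanh's derivative is near zero for most units, which suppresses gradients in the backward pass.

```python
import math
import random
import statistics


def forward_tanh_stack(weight_std, width=64, depth=10, batch=16, seed=0):
    """Push a batch of unit-variance inputs through `depth` tanh layers whose
    weights are drawn from N(0, weight_std^2). Returns the last layer's
    activations as a flat list."""
    rng = random.Random(seed)
    acts = [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(batch)]
    for _ in range(depth):
        w = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        acts = [
            [math.tanh(sum(a[i] * w[i][j] for i in range(width))) for j in range(width)]
            for a in acts
        ]
    return [v for row in acts for v in row]


def saturated_fraction(acts, threshold=0.99):
    """Fraction of units with |tanh(z)| > threshold; there tanh'(z) = 1 - tanh(z)^2
    is close to zero, so gradients flowing back through them nearly vanish."""
    return sum(abs(a) > threshold for a in acts) / len(acts)


if __name__ == "__main__":
    xavier_std = math.sqrt(2.0 / (64 + 64))  # 0.125 for a 64 -> 64 layer
    for label, std in [("too small", 0.01), ("xavier", xavier_std), ("too large", 1.0)]:
        out = forward_tanh_stack(std)
        print(f"{label:>9}: std of last-layer activations = {statistics.pstdev(out):.2e}, "
              f"saturated fraction = {saturated_fraction(out):.2f}")
```

With weights that are too small the signal all but disappears after ten layers, with weights that are too large most units saturate, and the Xavier scale keeps activations in between.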

Implementing Xavier Initialization

Implementing Xavier initialization in a machine learning model is relatively straightforward. Here are the basic steps:

- Choose a Distribution: Xavier initialization is a form of random initialization, so you first need to choose the distribution to sample the weights from. The two common choices are a uniform distribution and a normal distribution.

- Calculate the Variance: For each layer, compute the target variance from its fan-in and fan-out using the formula:

variance = 2 / (n_in + n_out)

- Convert the Variance to Distribution Parameters: For a normal distribution, use a standard deviation of sqrt(2 / (n_in + n_out)). For a uniform distribution U(-limit, limit), whose variance is limit^2 / 3, use limit = sqrt(6 / (n_in + n_out)).

- Initialize the Weights: Finally, sample each layer's weight matrix from the chosen distribution using those parameters.
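The steps above can be sketched from scratch with the Python standard library. The function names below are hypothetical helpers, not a library API, and weights are stored as plain nested lists so the example runs without NumPy or a deep learning framework. In practice you would use a framework's built-in initializer; note that some libraries (for example Keras's GlorotNormal) sample from a truncated normal rather than the plain normal used here.

```python
import math
import random
import statistics


def glorot_uniform(n_in, n_out, rng):
    """Sample an n_in x n_out matrix from U(-limit, limit), limit = sqrt(6 / (n_in + n_out)).
    U(-a, a) has variance a^2 / 3, so the weights get variance 2 / (n_in + n_out)."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    return [[rng.uniform(-limit, limit) for _ in range(n_out)] for _ in range(n_in)]


def glorot_normal(n_in, n_out, rng):
    """Sample an n_in x n_out matrix from N(0, std^2), std = sqrt(2 / (n_in + n_out))."""
    std = math.sqrt(2.0 / (n_in + n_out))
    return [[rng.gauss(0.0, std) for _ in range(n_out)] for _ in range(n_in)]


def empirical_variance(matrix):
    """Population variance of all entries in a nested-list matrix."""
    return statistics.pvariance([v for row in matrix for v in row])


if __name__ == "__main__":
    rng = random.Random(42)
    n_in, n_out = 300, 100
    target = 2.0 / (n_in + n_out)
    for name, init in [("uniform", glorot_uniform), ("normal", glorot_normal)]:
        w = init(n_in, n_out, rng)
        print(f"{name}: empirical variance {empirical_variance(w):.5f} vs target {target:.5f}")
```

Both samplers hit the same target variance; the uniform version additionally guarantees that no weight exceeds the limit in magnitude.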

Example Implementation

Here is an example implementation of Xavier initialization in a neural network using TensorFlow:

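```python
import tensorflow as tf
from tensorflow.keras.initializers import GlorotUniform

# Define the neural network architecture
model = tf.keras.Sequential([
    tf.keras.Input(shape=(784,)),
    # Xavier (Glorot) uniform initialization for the hidden layer's weights
    tf.keras.layers.Dense(128, activation='relu', kernel_initializer=GlorotUniform()),
    # Keras Dense layers default to kernel_initializer='glorot_uniform',
    # so this output layer is Xavier-initialized as well
    tf.keras.layers.Dense(10, activation='softmax'),
])

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```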

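In this example, we define a neural network with two layers: a fully connected hidden layer with 128 ReLU units and a softmax output layer with 10 units. The GlorotUniform initializer draws the first layer's weights from a uniform distribution with limit sqrt(6 / (fan_in + fan_out)). The output layer is Xavier-initialized too, because glorot_uniform is the default kernel initializer for Dense layers in Keras; passing GlorotUniform explicitly simply makes the choice visible. Note that Xavier initialization was derived for activations that are roughly linear around zero, such as tanh; for ReLU layers like the hidden layer here, He initialization (HeNormal or HeUniform in Keras) is often recommended instead.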
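As a worked check, the sketch below computes the range that Glorot uniform initialization uses for the two Dense layers in the Keras example, treating fan_in as the layer's input size and fan_out as its number of units (the convention Keras uses for Dense kernels). It is plain arithmetic, not a call into Keras, and the helper name glorot_uniform_limit is hypothetical.

```python
import math


def glorot_uniform_limit(fan_in, fan_out):
    """Half-width of the Glorot uniform range: sqrt(6 / (fan_in + fan_out))."""
    return math.sqrt(6.0 / (fan_in + fan_out))


# Dense layer shapes from the example: 784 -> 128 (ReLU) and 128 -> 10 (softmax).
layers = [("hidden", 784, 128), ("output", 128, 10)]

limits = {}
for name, fan_in, fan_out in layers:
    limit = glorot_uniform_limit(fan_in, fan_out)
    limits[name] = limit
    # U(-limit, limit) has variance limit^2 / 3, which should equal 2 / (fan_in + fan_out).
    print(f"{name}: limit = {limit:.4f}, "
          f"variance = {limit ** 2 / 3:.6f}, target = {2 / (fan_in + fan_out):.6f}")
```

The larger hidden layer gets a narrower range (about ±0.08) than the small output layer (about ±0.21), which is how the formula adapts the weight scale to each layer's shape.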

Conclusion

Xavier initialization is a simple, widely used weight initialization technique that can improve the training behavior of neural networks and, in some cases, their final performance. By scaling each layer's initial weights so that the variance of activations and gradients stays roughly constant from layer to layer, it helps prevent vanishing or exploding gradients and can speed up convergence. With this guide, you should have a solid understanding of how Xavier initialization works and how to implement it in machine learning models.
